Bad Pharma: How Drug Companies Mislead Doctors and Harm Patients

Authors: Ben Goldacre

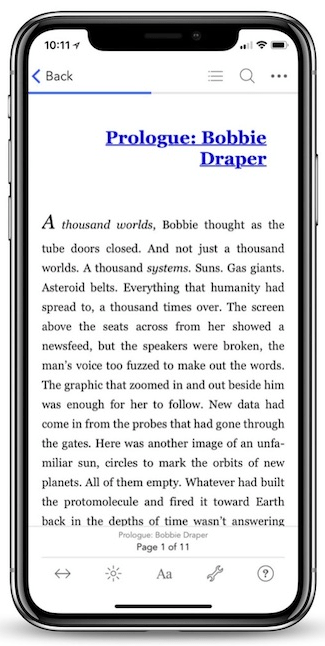

When you’ve got all the trial data in one place, you can conduct something called a meta-analysis, where you bring all the results together in one giant spreadsheet, pool all the data and get one single, summary figure, the most accurate summary of all the data on one clinical question. The output of this is called a ‘blobbogram’, and you can see one on the opposite page, in the logo of the Cochrane Collaboration, a global, non-profit academic organisation that has been producing gold-standard reviews of evidence on important questions in medicine since the 1980s.

This blobbogram shows the results of all the trials done on giving steroids to help premature babies survive. Each horizontal line is a trial: if that line is further to the left, then the trial showed steroids were beneficial and saved lives. The central, vertical line is the ‘line of no effect’, and if the horizontal line of a trial touches the line of no effect, then that trial showed no statistically significant benefit. Some trials are represented by longer horizontal lines: these were smaller trials, with fewer participants, which means they are prone to more error, so the estimate of the benefit has more uncertainty, and therefore the horizontal line is longer. Finally, the diamond at the bottom shows the ‘summary effect’: this is the overall benefit of the intervention, pooling together the results of all the individual trials. The diamond is much narrower than the lines for individual trials, because the estimate is much more accurate: it is summarising the effect of the drug in many more patients. On this blobbogram you can see – because the diamond is a long way from the line of no effect – that giving steroids is hugely beneficial. In fact, it reduces the chances of a premature baby dying by almost half.
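To make the pooling step concrete, here is a minimal sketch of one common way to compute a summary effect: fixed-effect, inverse-variance weighting of log risk ratios. The trial numbers are invented purely for illustration (they are not the steroid trials), and the function names are hypothetical; real reviews may well use other models and software.

```python
import math

# Hypothetical trials, invented for illustration only:
# (deaths_treated, n_treated, deaths_control, n_control)
TRIALS = [
    (5, 50, 9, 50),
    (12, 120, 20, 118),
    (30, 400, 52, 410),
    (3, 40, 6, 42),
]


def log_risk_ratio(deaths_t, n_t, deaths_c, n_c):
    """Return (log risk ratio, variance of the log risk ratio) for one trial."""
    log_rr = math.log((deaths_t / n_t) / (deaths_c / n_c))
    variance = 1 / deaths_t - 1 / n_t + 1 / deaths_c - 1 / n_c
    return log_rr, variance


def confidence_interval(log_rr, variance, z=1.96):
    """Return (risk ratio, lower, upper) for an approximate 95% interval."""
    half_width = z * math.sqrt(variance)
    return (
        math.exp(log_rr),
        math.exp(log_rr - half_width),
        math.exp(log_rr + half_width),
    )


def pool_fixed_effect(trials):
    """Pool trials by inverse-variance weighting; return (log RR, variance)."""
    total_weight = 0.0
    weighted_sum = 0.0
    for trial in trials:
        log_rr, variance = log_risk_ratio(*trial)
        weight = 1 / variance  # precise (large) trials count for more
        total_weight += weight
        weighted_sum += weight * log_rr
    return weighted_sum / total_weight, 1 / total_weight


# One "horizontal line" per trial, then the "diamond" for the pooled result.
for trial in TRIALS:
    rr, low, high = confidence_interval(*log_risk_ratio(*trial))
    print(f"trial  RR={rr:.2f}  95% CI {low:.2f} to {high:.2f}")

rr, low, high = confidence_interval(*pool_fixed_effect(TRIALS))
print(f"pooled RR={rr:.2f}  95% CI {low:.2f} to {high:.2f}")
```

Because the pooled variance is the reciprocal of the summed weights, it is always smaller than the variance of any single trial, which is why the diamond is narrower than every individual line.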

The amazing thing about this blobbogram is that it had to be invented, and this happened very late in medicine’s history. For many years we had all the information we needed to know that steroids saved lives, but nobody knew they were effective, because nobody did a systematic review until 1989. As a result, the treatment wasn’t given widely, and huge numbers of babies died unnecessarily; not because we didn’t have the information, but simply because we didn’t synthesise it together properly.

In case you think this is an isolated case, it’s worth examining exactly how broken medicine was until frighteningly recent times. The diagram on the opposite page contains two blobbograms, or ‘forest plots’, showing all the trials ever conducted to see whether giving streptokinase, a clot-busting drug, improves survival in patients who have had a heart attack.[11]

Look first only at the forest plot on the following page. This is a conventional forest plot, from an academic journal, so it’s a little busier than the stylised one in the Cochrane logo. The principles, however, are exactly the same. Each horizontal line is a trial, and you can see that there is a hodgepodge of results, with some trials showing a benefit (they don’t touch the vertical line of no effect, headed ‘1’) and some showing no benefit (they do cross that line). At the bottom, however, you can see the summary effect – a dot on this old-fashioned blobbogram, rather than a diamond. And you can see very clearly that overall, streptokinase saves lives.

So what’s that on the right? It’s something called a cumulative meta-analysis. If you look at the list of studies on the left of the diagram, you can see that they are arranged in order of date. The cumulative meta-analysis on the right adds in each new trial’s results, as they arrived over history, to the previous trials’ results. This gives the best possible running estimate, each year, of how the evidence would have looked at that time, if anyone had bothered to do a meta-analysis on all the data available to them. From this cumulative blobbogram you can see that the horizontal lines, the ‘summary effects’, narrow over time as more and more data is collected, and the estimate of the overall benefit of this treatment becomes more accurate. You can also see that these horizontal lines stopped touching the vertical line of no effect a very long time ago – and crucially, they did so a long time before we started giving streptokinase to everyone with a heart attack.
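A cumulative meta-analysis can be sketched in a few lines: sort the trials by date, then re-pool after each new trial arrives. The example below assumes fixed-effect, inverse-variance pooling of log risk ratios, and the trial data are hypothetical (they are not the streptokinase trials); the function names are also made up for this sketch.

```python
import math

# Hypothetical trials, invented for illustration only:
# (year, deaths_treated, n_treated, deaths_control, n_control)
TRIALS = [
    (1960, 2, 20, 4, 21),
    (1965, 8, 100, 12, 98),
    (1971, 20, 300, 31, 295),
    (1979, 60, 1000, 85, 1005),
    (1986, 600, 8500, 750, 8500),
]


def cumulative_meta_analysis(trials, z=1.96):
    """Return one (year, risk ratio, lower, upper) row per trial,
    each row pooling every trial up to and including that year."""
    rows = []
    total_weight = 0.0
    weighted_sum = 0.0
    for year, d_t, n_t, d_c, n_c in sorted(trials):  # oldest first
        log_rr = math.log((d_t / n_t) / (d_c / n_c))
        variance = 1 / d_t - 1 / n_t + 1 / d_c - 1 / n_c
        weight = 1 / variance
        total_weight += weight
        weighted_sum += weight * log_rr
        pooled = weighted_sum / total_weight
        half_width = z / math.sqrt(total_weight)
        rows.append(
            (year, math.exp(pooled),
             math.exp(pooled - half_width),
             math.exp(pooled + half_width))
        )
    return rows


def first_clear_year(rows):
    """Return the first year whose interval lies wholly below 1, else None."""
    for year, _, _, upper in rows:
        if upper < 1:
            return year
    return None


rows = cumulative_meta_analysis(TRIALS)
for year, rr, low, high in rows:
    print(f"{year}: RR={rr:.2f}  95% CI {low:.2f} to {high:.2f}")
print("interval first excludes 'no effect' in:", first_clear_year(rows))
```

Each row is the running summary effect as it would have looked at that date. The interval can only narrow as trials are added, because every new trial adds weight to the pool.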

In case you haven’t spotted it for yourself already – to be fair, the entire medical profession was slow to catch on – this chart has devastating implications. Heart attacks are an incredibly common cause of death. We had a treatment that worked, and we had all the information we needed to know that it worked, but once again we didn’t bring it together systematically to get that correct answer. Half of the people in those trials at the bottom of the blobbogram were randomly assigned to receive no streptokinase, I think unethically, because we had all the information we needed to know that streptokinase worked: they were deprived of effective treatments. But they weren’t alone, because so were most of the rest of the people in the world at the time.

These stories illustrate, I hope, why systematic reviews and meta-analyses are so important: we need to bring together *all* of the evidence on a question, not just cherry-pick the bits that we stumble upon, or intuitively like the look of. Mercifully the medical profession has come to recognise this over the past couple of decades, and systematic reviews with meta-analyses are now used almost universally, to ensure that we have the most accurate possible summary of all the trials that have been done on a particular medical question.

But these stories also demonstrate why missing trial results are so dangerous. If one researcher or doctor ‘cherry-picks’, when summarising the existing evidence, and looks only at the trials that support their hunch, then they can produce a misleading picture of the research. That is a problem for that one individual (and for anyone who is unwise or unlucky enough to be influenced by them). But if we are *all* missing the negative trials, the entire medical and academic community, around the world, then when we pool the evidence to get the best possible view of what works – as we must do – we are all completely misled. We get a misleading impression of the treatment’s effectiveness: we incorrectly exaggerate its benefits; or perhaps even find incorrectly that an intervention was beneficial, when in reality it did harm.

Now that you understand the importance of systematic reviews, you can see why missing data matters. But you can also appreciate that when I explain *how much* trial data is missing, I am giving you a clean overview of the literature, because I will be explaining that evidence using systematic reviews.

How much data is missing?

If you want to prove that trials have been left unpublished, you have an interesting problem: you need to prove the existence of studies you don’t have access to. To work around this, people have developed a simple approach: you identify a group of trials you know have been conducted and completed, then check to see if they have been published. Finding a list of completed trials is the tricky part of this job, and to achieve it people have used various strategies: trawling the lists of trials that have been approved by ethics committees (or ‘institutional review boards’ in the USA), for example; or chasing up the trials discussed by researchers at conferences.

In 2008 a group of researchers decided to check for publication of every trial that had ever been reported to the US Food and Drug Administration for all the antidepressants that came onto the market between 1987 and 2004. This was no small task. The FDA archives contain a reasonable amount of information on all the trials that were submitted to the regulator in order to get a licence for a new drug. But that’s not all the trials, by any means, because those conducted after the drug has come onto the market will not appear there; and the information that is provided by the FDA is hard to search, and often scanty. But it is an important subset of the trials, and more than enough for us to begin exploring how often trials go missing, and why. It’s also a representative slice of trials from all the major drug companies.

The researchers found seventy-four studies in total, representing 12,500 patients’ worth of data. Thirty-eight of these trials had positive results, and found that the new drug worked; thirty-six were negative. The results were therefore an even split between success and failure for the drugs, in reality. Then the researchers set about looking for these trials in the published academic literature, the material available to doctors and patients. This provided a very different picture. Thirty-seven of the positive trials – all but one – were published in full, often with much fanfare. But the trials with negative results had a very different fate: only three were published. Twenty-two were simply lost to history, never appearing anywhere other than in those dusty, disorganised, thin FDA files. The remaining eleven which had negative results in the FDA summaries did appear in the academic literature, but were written up as if the drug was a success. If you think this sounds absurd, I agree: we will see in Chapter 4, on ‘bad trials’, how a study’s results can be reworked and polished to distort and exaggerate its findings.

This was a remarkable piece of work, spread over twelve drugs from all the major manufacturers, with no stand-out bad guy. It very clearly exposed a broken system: in reality we have thirty-eight positive trials and thirty-six negative ones; in the academic literature we have forty-eight positive trials and three negative ones. Take a moment to flip back and forth between those in your mind: ‘thirty-eight positive trials, thirty-six negative’; or ‘forty-eight positive trials and only three negative’.
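The arithmetic behind those two pictures is easy to check. This short tally uses only the counts given in the breakdown above (thirty-eight positive trials, of which thirty-seven were published; and, among the negative trials, three published as negative, twenty-two unpublished and eleven written up as positive). The variable names are just labels chosen for this sketch.

```python
# Counts taken from the breakdown of the FDA antidepressant trials above.
fda_positive = 38
positive_published = 37
negative_published_as_negative = 3
negative_unpublished = 22
negative_published_as_positive = 11

# What the regulator's files show.
fda_negative = (
    negative_published_as_negative
    + negative_unpublished
    + negative_published_as_positive
)
fda_total = fda_positive + fda_negative

# What a doctor reading the journals would see.
literature_positive = positive_published + negative_published_as_positive
literature_negative = negative_published_as_negative
literature_total = literature_positive + literature_negative

share_positive_fda = fda_positive / fda_total
share_positive_literature = literature_positive / literature_total

print(f"FDA files:  {fda_positive} positive, {fda_negative} negative "
      f"({share_positive_fda:.0%} positive)")
print(f"Literature: {literature_positive} positive, {literature_negative} negative "
      f"({share_positive_literature:.0%} positive)")
```

In the regulator's files roughly half of the trials are positive; in the published literature it is about 94 per cent.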

If we were talking about one single study, from one single group of researchers, who decided to delete half their results because they didn’t give the overall picture they wanted, then we would quite correctly call that act ‘research misconduct’. Yet somehow when exactly the same phenomenon occurs, but with whole studies going missing, by the hands of hundreds and thousands of individuals, spread around the world, in both the public and private sector, we accept it as a normal part of life.[12] It passes by, under the watchful eyes of regulators and professional bodies who do nothing, as routine, despite the undeniable impact it has on patients.

Even more strange is this: we’ve known about the problem of negative studies going missing for almost as long as people have been doing serious science.

This was first formally documented by a psychologist called Theodore Sterling in 1959.[13] He went through every paper published in the four big psychology journals of the time, and found that 286 out of 294 reported a statistically significant result. This, he explained, was plainly fishy: it couldn’t possibly be a fair representation of every study that had been conducted, because if we believed that, we’d have to believe that almost every theory ever tested by a psychologist in an experiment had turned out to be correct. If psychologists really were so great at predicting results, there’d hardly be any point in bothering to run experiments at all. In 1995, at the end of his career, the same researcher came back to the same question, half a lifetime later, and found that almost nothing had changed.[14]

Sterling was the first to put these ideas into a formal academic context, but the basic truth had been recognised for many centuries. Francis Bacon explained in 1620 that we often mislead ourselves by only remembering the times something worked, and forgetting those when it didn’t.[15] Fowler in 1786 listed the cases he’d seen treated with arsenic, and pointed out that he could have glossed over the failures, as others might be tempted to do, but had included them.[16] To do otherwise, he explained, would have been misleading.

Yet it was only three decades ago that people started to realise that missing trials posed a serious problem for medicine. In 1980 Elina Hemminki found that almost half the trials conducted in the mid-1970s in Finland and Sweden had been left unpublished.[17]

Then, in 1986, an American researcher called Robert Simes decided to investigate the trials on a new treatment for ovarian cancer. This was an important study, because it looked at a life-or-death question. Combination chemotherapy for this kind of cancer has very tough side effects, and knowing this, many researchers had hoped it might be better to give a single ‘alkylating agent’ drug first, before moving on to full chemotherapy. Simes looked at all the trials published on this question in the academic literature, read by doctors and academics. From this, giving a single drug first looked like a great idea: women with advanced ovarian cancer (which is not a good diagnosis to have) who were on the alkylating agent alone were significantly more likely to survive longer.

Then Simes had a smart idea. He knew that sometimes trials can go unpublished, and he had heard that papers with less ‘exciting’ results are the most likely to go missing. To prove that this has happened, though, is a tricky business: you need to find a fair, representative sample of all the trials that have been conducted, and then compare their results with the smaller pool of trials that have been published, to see if there are any embarrassing differences. There was no easy way to get this information from the medicines regulator (we will discuss this problem in some detail later), so instead he went to the International Cancer Research Data Bank. This contained a register of interesting trials that were happening in the USA, including most of the ones funded by the government, and many others from around the world. It was by no means a complete list, but it did have one crucial feature: the trials were registered before their results came in, so any list compiled from this source would be, if not complete, at least a representative sample of all the research that had ever been done, and not biased by whether their results were positive or negative.

When Simes compared the results of the published trials against the pre-registered trials, the results were disturbing. Looking at the academic literature – the studies that researchers and journal editors chose to publish – alkylating agents alone looked like a great idea, reducing the rate of death from advanced ovarian cancer significantly. But when you looked only at the pre-registered trials – the unbiased, fair sample of all the trials ever conducted – the new treatment was no better than old-fashioned chemotherapy.